Taking a Spark Profile

What does the plugin do?

Spark is a performance profiling plugin that can display server information such as TPS, memory usage, tick durations, CPU usage, and disk usage. More importantly, it can create a performance profile that can be viewed in a fully featured web interface. It does not require any configuration and is incredibly easy to install.

Setup

You can ignore this step if you are running Purpur 1.19.1 or above.

Paper 1.21+ includes Spark by default and you do not need to download Spark separately.

Download the latest build from Spark and drop it into your plugins folder (or your mods folder if you are running Forge/Fabric). Start or restart the server, and you're done! If you need help installing plugins, check How to install plugins.

Using The Profiler

Some versions of Spark use different command roots: /sparkb for BungeeCord, /sparkv for Velocity, and /sparkc for the Fabric/Forge client. If you are having trouble running the command, try using the corresponding suffix.

The most useful diagnostic tool is the profiler, which can be started with /spark profiler start.

Usually you'll want to run the profiler while your server is under stress. To get the most out of the report, try to leave it running for 10 minutes or more. You can also add additional parameters to the command; for more information, check the Spark Documentation.

Once you are ready to check the results of the profiler, run /spark profiler stop and copy the link produced.

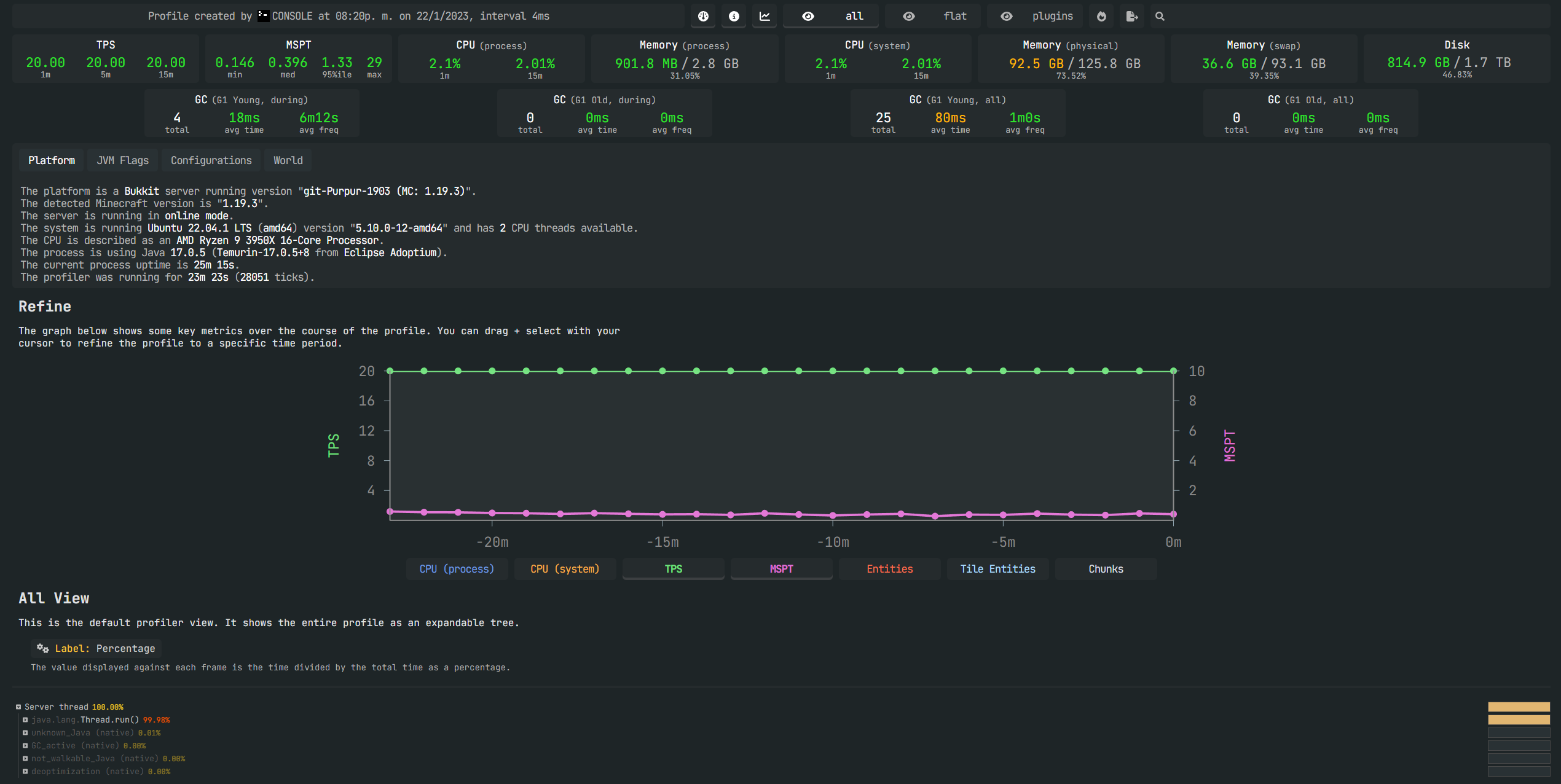

When you open the link you'll find a useful web interface that displays all the data collected.
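
Put together, the profiling workflow described above might look like the following sketch when typed in-game or in the server console. Start the profiler, let the server run under load for 10 minutes or more, then stop it to get the link:

```
/spark profiler start
/spark profiler stop
```

As an example of an additional parameter, the Spark documentation describes a --timeout option (in seconds) that stops the profiler automatically. The value 600 below is only an illustration, and the available options may differ between Spark versions, so check the documentation for the version you are running:

```
/spark profiler start --timeout 600
```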

Other Useful Commands

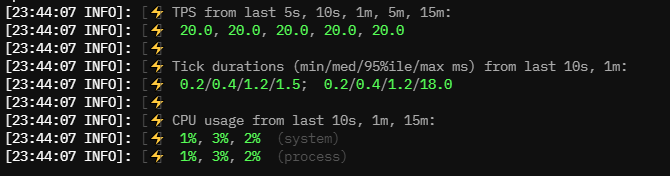

Running /tps will display an output that looks like this:

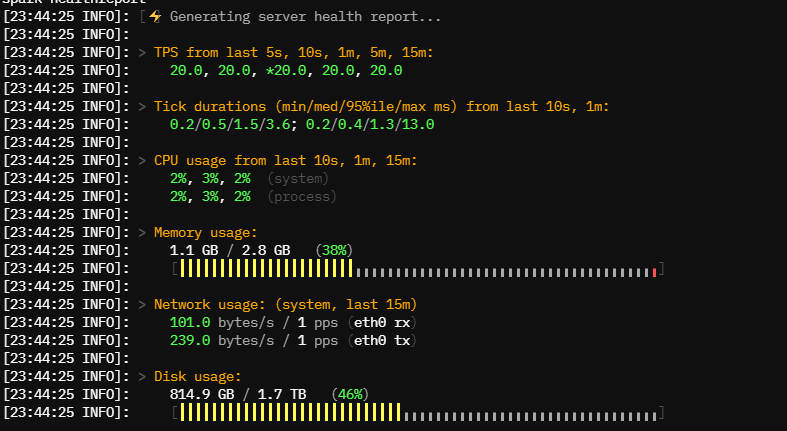

Running /spark healthreport will display an output that looks like this:

The values will depend on your server. Newer versions of Spark may show more useful information, as the utility is in active development.

For more parameters and other useful commands, check the Official Spark Documentation.

Need Help Reading the Profile?

If you need help reading your Spark profile, create a ticket in the Bloom.host Discord!